langsmith-mcp-server

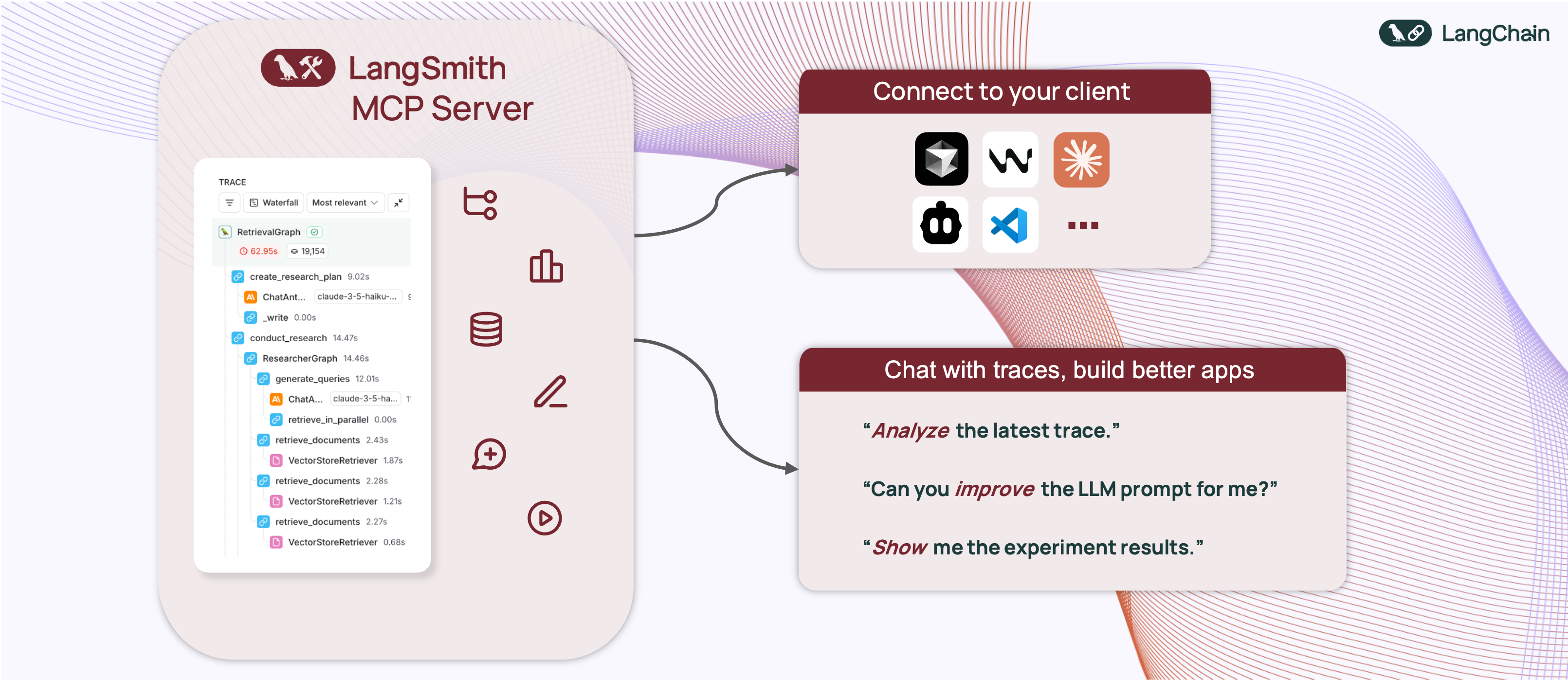

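LangSmith MCP Server is a server that connects language models to the LangSmith platform and lets them manage conversation history and prompts. This makes conversations easier to track and analyze and gives developers access to more advanced capabilities. The server is still under development, and more features are planned.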


🦜🛠️ LangSmith MCP Server

> [!WARNING]
> LangSmith MCP Server is under active development and many features are not yet implemented.

A production-ready Model Context Protocol (MCP) server that provides seamless integration with the LangSmith observability platform. This server enables language models to fetch conversation history and prompts from LangSmith.

📋 Overview

The LangSmith MCP Server bridges the gap between language models and the LangSmith platform, enabling advanced capabilities for conversation tracking, prompt management, and analytics integration.

🛠️ Installation Options

📝 General Prerequisites

Install uv (a fast Python package installer and resolver):

curl -LsSf https://astral.sh/uv/install.sh | sh

Clone this repository and navigate to the project directory:

git clone https://github.com/langchain-ai/langsmith-mcp-server.git
cd langsmith-mcp-server

🔌 MCP Client Integration

Once you have the LangSmith MCP Server, you can integrate it with various MCP-compatible clients. You have two installation options:

📦 From PyPI

Install the package:

uv run pip install --upgrade langsmith-mcp-server

Add to your client MCP config:

{
  "mcpServers": {
    "LangSmith API MCP Server": {
      "command": "/path/to/uvx",
      "args": [
        "langsmith-mcp-server"
      ],
      "env": {
        "LANGSMITH_API_KEY": "your_langsmith_api_key"
      }
    }
  }
}

⚙️ From Source

Add the following configuration to your MCP client settings:

{
  "mcpServers": {
    "LangSmith API MCP Server": {
      "command": "/path/to/uv",
      "args": [
        "--directory",
        "/path/to/langsmith-mcp-server/langsmith_mcp_server",
        "run",
        "server.py"
      ],
      "env": {
        "LANGSMITH_API_KEY": "your_langsmith_api_key"
      }
    }
  }
}

Replace the following placeholders:

- `/path/to/uv`: The absolute path to your uv installation (e.g., `/Users/username/.local/bin/uv`). You can find it by running `which uv`.
- `/path/to/langsmith-mcp-server`: The absolute path to your langsmith-mcp project directory
- `your_langsmith_api_key`: Your LangSmith API key

Example configuration:

{
  "mcpServers": {
    "LangSmith API MCP Server": {
      "command": "/Users/mperini/.local/bin/uvx",
      "args": [
        "langsmith-mcp-server"
      ],
      "env": {
        "LANGSMITH_API_KEY": "lsv2_pt_1234"
      }
    }
  }
}

Copy this configuration into Cursor > MCP Settings.
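If you would rather not edit the placeholders by hand, the "From Source" configuration can be generated with a short script. The following is a minimal sketch, not part of this repository: the function name `build_from_source_config` is made up for illustration, and it assumes `uv` is on your `PATH` and that you point it at your local clone.

```python
import json
import os
import shutil
from typing import Optional


def build_from_source_config(repo_dir: str, api_key: str, uv_path: Optional[str] = None) -> dict:
    """Return the "From Source" MCP client config with the placeholders filled in.

    Hypothetical helper for illustration; it is not shipped with the server.
    """
    # Same lookup as `which uv`; keeps the placeholder if uv is not on PATH.
    uv = uv_path or shutil.which("uv") or "/path/to/uv"
    return {
        "mcpServers": {
            "LangSmith API MCP Server": {
                "command": uv,
                "args": [
                    "--directory",
                    os.path.join(os.path.abspath(repo_dir), "langsmith_mcp_server"),
                    "run",
                    "server.py",
                ],
                "env": {"LANGSMITH_API_KEY": api_key},
            }
        }
    }


if __name__ == "__main__":
    config = build_from_source_config(
        repo_dir=".",  # assumes you run this from the cloned project directory
        api_key=os.environ.get("LANGSMITH_API_KEY", "your_langsmith_api_key"),
    )
    print(json.dumps(config, indent=2))
```

Run it from the project directory and paste the printed JSON into your client's MCP settings.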

🧪 Development and Contributing 🤝

If you want to develop or contribute to the LangSmith MCP Server, follow these steps:

Create a virtual environment and install dependencies:

uv sync

To include test dependencies:

uv sync --group test

View available MCP commands:

uvx langsmith-mcp-server

For development, run the MCP inspector:

uv run mcp dev langsmith_mcp_server/server.py

- This will start the MCP inspector on a network port
- Install any required libraries when prompted
- The MCP inspector will be available in your browser
- Set the LANGSMITH_API_KEY environment variable in the inspector
- Connect to the server
- Navigate to the "Tools" tab to see all available tools
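As a scripted alternative to the inspector, you can also connect to the server over stdio and list its tools from Python. This is a rough sketch using the `mcp` Python SDK client (`ClientSession`, `StdioServerParameters`, `stdio_client`). The helper names `server_launch_spec` and `list_tool_names` are hypothetical, and it assumes the PyPI package is runnable through `uvx` as described in the installation section.

```python
import shutil


def server_launch_spec(api_key: str) -> dict:
    """Command, args, and env for launching the server via uvx (hypothetical helper)."""
    return {
        "command": shutil.which("uvx") or "uvx",
        "args": ["langsmith-mcp-server"],
        "env": {"LANGSMITH_API_KEY": api_key},
    }


async def list_tool_names(api_key: str) -> list:
    """Start the server as a subprocess and return the tool names it advertises."""
    # Imported here so server_launch_spec stays usable without the `mcp` package.
    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    params = StdioServerParameters(**server_launch_spec(api_key))
    async with stdio_client(params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()
            result = await session.list_tools()
            return [tool.name for tool in result.tools]


# Usage (needs the `mcp` package, uvx on PATH, and a real API key):
#   import asyncio, os
#   print(asyncio.run(list_tool_names(os.environ["LANGSMITH_API_KEY"])))
```

The names it prints should match what the inspector shows in its "Tools" tab.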

Before submitting your changes, run the linting and formatting checks:

make lint
make format

🚀 Example Use Cases

The server enables powerful capabilities including:

- 💬 Conversation History: "Fetch the history of my conversation with the AI assistant from thread 'thread-123' in project 'my-chatbot'"

- 📚 Prompt Management: "Get all public prompts in my workspace"

- 🔍 Smart Search: "Find private prompts containing the word 'joke'"

- 📝 Template Access: "Pull the template for the 'legal-case-summarizer' prompt"

- 🔧 Configuration: "Get the system message from a specific prompt template"

🛠️ Available Tools

The LangSmith MCP Server provides the following tools for integration with LangSmith:

| Tool Name | Description |
|---|---|
| list_prompts | Fetch prompts from LangSmith with optional filtering. Filter by visibility (public/private) and limit results. |
| get_prompt_by_name | Get a specific prompt by its exact name, returning the prompt details and template. |
| get_thread_history | Retrieve the message history for a specific conversation thread, returning messages in chronological order. |
| get_project_runs_stats | Get statistics about runs in a LangSmith project, either for the last run or overall project stats. |
| fetch_trace | Fetch trace content for debugging and analyzing LangSmith runs using project name or trace ID. |
| list_datasets | Fetch LangSmith datasets with filtering options by ID, type, name, or metadata. |
| list_examples | Fetch examples from a LangSmith dataset with advanced filtering options. |
| read_dataset | Read a specific dataset from LangSmith using dataset ID or name. |
| read_example | Read a specific example from LangSmith using the example ID and optional version information. |
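Because the server is still under active development, the tools a given install advertises may differ from this table. A small check like the one below can flag the difference. It is only a sketch: `DOCUMENTED_TOOLS` is copied from the table above, and `diff_tools` is a hypothetical helper that takes whatever list of tool names your MCP client reports.

```python
# Tool names copied from the table above.
DOCUMENTED_TOOLS = {
    "list_prompts",
    "get_prompt_by_name",
    "get_thread_history",
    "get_project_runs_stats",
    "fetch_trace",
    "list_datasets",
    "list_examples",
    "read_dataset",
    "read_example",
}


def diff_tools(advertised) -> dict:
    """Compare tool names reported by a server against the documented set."""
    advertised = set(advertised)
    return {
        # Documented here but not reported by the server.
        "missing": sorted(DOCUMENTED_TOOLS - advertised),
        # Reported by the server but not listed here.
        "undocumented": sorted(advertised - DOCUMENTED_TOOLS),
    }
```

For example, `diff_tools(["list_prompts", "fetch_trace"])` lists the other seven tool names under `"missing"` and leaves `"undocumented"` empty.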

📄 License

This project is distributed under the MIT License. For detailed terms and conditions, please refer to the LICENSE file.

Made with ❤️ by the LangChain Team